The Hall of Monty-zuma

By now, most of you probably know about the famous Monty Hall Problem, but in case you’ve been living under a rock for the last 35 years, I will provide a brief description of the situation:

Assume that a room is equipped with three doors. Behind two are goats, and behind the third is a shiny new car. You are asked to pick a door, and you will win whatever is behind it. Let's say you pick door #1. Before that door is opened, however, someone who knows what's behind the doors (Monty Hall) opens one of the other two doors, revealing a goat, and asks whether you wish to switch your selection to the third door (i.e., the door that you didn't pick and he didn't open). The Monty Hall problem asks whether you should.

I know. Your instincts might tell you it doesn’t matter if you switch: Either (a) your initial 1/3 probability of picking the car doesn’t change when a goat is revealed or (b) the probability of picking the car increases to 1/2 with only two unopened doors remaining. Both (flawed) logical approaches will convince you to stick with your initial selection, and you wouldn’t be alone in that opinion. But the truth is that you should switch to the last door when given the opportunity.

Why?

Well, when you pick door #1, the probability of picking the car is 1/3, leaving 2/3 probability the car is behind one of the other doors. When Monty reveals a goat behind, say, door #3 and asks if you’d like to switch your pick (to door #2), it’s “as if” you were able to travel back in time and pick both doors 2 and 3!

(Technically, this is a heuristic approach because you can't pick two doors, but it's a nice thought experiment that acts as an intuition pump.) Imagine you picked door #1, and let $O$ be the event that the car is behind it. The probability of finding the car behind door #1 is

$$\Pr(O) = \frac{1}{3}.$$

This means the probability of the car being behind door #2 or #3 is

$$1 - \Pr(O) = \frac{2}{3}.$$

Imagine Monty then reveals a goat behind door #3 and asks whether you'd like to switch doors. You should. Here, the new information doesn't change the 1/3 probability that the car is behind door #1, in part because the probability that at least one of the two doors you didn't pick hid a goat was 1 all along. But if you switch doors, you're in precisely the situation you'd be in had you been able to go back and pick both doors: you hold door #2, a goat has been revealed behind door #3, and you have a 2/3 probability of winning the car.

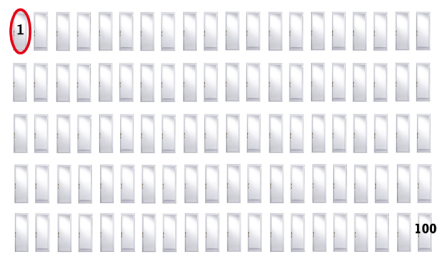
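If the time-travel heuristic still feels slippery, the three-door game is small enough to enumerate exactly. Below is a minimal Python sketch (the function name `exact_win_probabilities` is my own) that walks through every car position and every door Monty could open. It assumes the car is placed uniformly at random and that Monty, when your first pick happens to be the car, chooses between the two goat doors at random:

```python
from fractions import Fraction

DOORS = (1, 2, 3)

def exact_win_probabilities(pick: int = 1) -> tuple[Fraction, Fraction]:
    """Return exact (P(win by sticking), P(win by switching)).

    Assumptions: the car is equally likely to be behind each door, and
    Monty, who knows where it is, opens a goat door other than your pick,
    choosing uniformly when he has two options.
    """
    p_stick = Fraction(0)
    p_switch = Fraction(0)
    for car in DOORS:
        p_car = Fraction(1, len(DOORS))
        # Monty may open any door that is neither your pick nor the car.
        options = [d for d in DOORS if d != pick and d != car]
        for opened in options:
            p_path = p_car * Fraction(1, len(options))
            # Switching means taking the one door left closed.
            switch_to = next(d for d in DOORS if d not in (pick, opened))
            if car == pick:
                p_stick += p_path
            if car == switch_to:
                p_switch += p_path
    return p_stick, p_switch

stick, switch = exact_win_probabilities()
print(f"stick: {stick}, switch: {switch}")  # stick: 1/3, switch: 2/3
```

Sticking wins only on the paths where your first guess was right (total weight 1/3); switching wins on every other path (total weight 2/3).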

Perhaps an even better way to understand the Monty Hall Problem was given by Charles Wheelan in his 2013 book Naked Statistics: Suppose Monty shows you 100 doors and tells you there are goats behind 99 of them and a car behind one. He asks you to pick a door. You pick door #1. He then reveals the goats behind doors 2-57 and 59-100 and asks if you'd like to switch your pick to door #58. It's painfully obvious you'd switch, because doing so increases your probability of finding the car from 0.01 (i.e., getting the right door on your first guess) to 0.99. That's the same logic and probability theory we followed when we had only three doors to choose from. (We'll skip the mathematical proof, but if you're still not convinced, try it yourself, or suppose Monty showed you a trillion doors.)
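Wheelan's version generalizes to any number of doors. Here's a rough Monte Carlo sketch, assuming Monty knows where the car is and opens every door except your pick and one other, never revealing the car. The helper names `play` and `win_rate` are hypothetical, and the trial count and seed are arbitrary choices:

```python
import random

def play(n_doors: int, switch: bool, rng: random.Random) -> bool:
    """Play one round of the n-door game; return True if you win the car.

    Assumption: Monty opens all doors except your pick and one other,
    and he never opens the door hiding the car.
    """
    car = rng.randrange(n_doors)
    pick = rng.randrange(n_doors)
    if car != pick:
        # Monty must leave the car's door closed.
        other = car
    else:
        # You already hold the car, so Monty leaves a random goat door closed.
        other = rng.choice([d for d in range(n_doors) if d != pick])
    final = other if switch else pick
    return final == car

def win_rate(n_doors: int, switch: bool, trials: int = 100_000, seed: int = 0) -> float:
    """Estimate the probability of winning with a fixed strategy."""
    rng = random.Random(seed)
    wins = sum(play(n_doors, switch, rng) for _ in range(trials))
    return wins / trials

for n in (3, 100):
    # Expect roughly 1/n when sticking and (n - 1)/n when switching.
    print(n, "stick:", win_rate(n, switch=False), "switch:", win_rate(n, switch=True))
```

With 100 doors the estimates should land near 0.01 for sticking and 0.99 for switching: the only way switching loses is if your first guess was already right.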

But here’s something crazy.

Suppose instead that someone else from the audience (not Monty), who doesn't know what's behind the doors, randomly chooses one of the two doors you didn't pick and happens to reveal a goat. Then the probability that your door hides the car is 1/2, and there's no benefit to switching! This is completely counterintuitive (at least to me and Paul Erdős!) and even more confusing if you've finally accepted the increase to 2/3 when switching in the original scenario, but we can prove it mathematically using Bayesian probability. For a primer on Bayesian probability, look here, here, and here.
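Before the Bayesian proof, a quick simulation can make the claim plausible. This is a sketch under stated assumptions: you always pick door #1 (index 0 in the code), the audience member opens one of the other two doors uniformly at random without knowing where the car is, and rounds in which the car gets revealed are thrown away because no switching decision arises. The function name `ignorant_host` is my own:

```python
import random

def ignorant_host(trials: int = 200_000, seed: int = 1) -> tuple[float, float]:
    """Estimate P(win | goat revealed) for sticking and for switching.

    Assumptions: doors are indexed 0, 1, 2; you always pick door 0; the
    opener picks door 1 or 2 uniformly at random with no knowledge of the car.
    """
    rng = random.Random(seed)
    pick = 0
    kept = stick_wins = switch_wins = 0
    for _ in range(trials):
        car = rng.randrange(3)
        opened = rng.choice([1, 2])
        if opened == car:
            continue  # The car was revealed, so there is no decision to make.
        kept += 1
        remaining = 3 - pick - opened  # the third door, since 0 + 1 + 2 == 3
        stick_wins += (car == pick)
        switch_wins += (car == remaining)
    return stick_wins / kept, switch_wins / kept

print(ignorant_host())  # both estimates should be close to 0.5
```

Discarding the car-revealing rounds is what conditioning on "a goat was revealed" means here, and it's exactly where the uninformed opener differs from Monty, who never reveals the car.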

Suppose Alice, a U.S. Marine, selects door #1 in the hope of winning the car, after which a second contestant, Bob, is called to the stage to open one of the remaining doors (#2 or #3). Let Pr(O), Pr(T), and Pr(R) be the probabilities of the car being behind door #1, #2, and #3, respectively, and define G to be the event that Bob opens door #3 and reveals a goat. Further, define Pr(G|O), Pr(G|T), and Pr(G|R) as the conditional probabilities of G given that the car is behind door #1, #2, and #3, respectively. As Bob comes to the stage to make his selection, here's what we know:

- Pr(O) = Pr(T) = Pr(R) = 1/3. As in the scenario with Monty, the car is equally likely to be behind each door before anything is revealed; unlike Monty, though, neither Alice nor Bob knows where it is.

- Pr(G|O) = 1/2. If the car is behind door #1, Bob opens door #3 half the time, and when he does, he's guaranteed to reveal a goat. (Remember, Alice chose door #1, so Bob must choose between doors #2 and #3.)

- Pr(G|T) = 1/2. If the car is behind door #2, Bob has a 50-50 shot at revealing a goat behind door #3 because he might pick door #2 and win the car.

- Pr(G|R) = 0. If the car is behind door #3, there’s no way Bob can reveal a goat there.

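By the law of total probability,

$$\Pr(G) = \Pr(O)\Pr(G \mid O) + \Pr(T)\Pr(G \mid T) + \Pr(R)\Pr(G \mid R) = \left(\tfrac{1}{3}\right)\left(\tfrac{1}{2}\right) + \left(\tfrac{1}{3}\right)\left(\tfrac{1}{2}\right) + \left(\tfrac{1}{3}\right)(0) = \tfrac{1}{3},$$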

and the posterior probability is calculated (via Bayes' theorem) as

$$\Pr(O \mid G) = \frac{\Pr(O)\Pr(G \mid O)}{\Pr(G)} = \frac{(1/3)(1/2)}{1/3} = \frac{1}{2}.$$

The reader is invited to confirm the same value for Pr(T|G). It's interesting to note that the only difference between the two scenarios lies in the value of Pr(G|T). Monty knows where the car is, so if it's behind door #2, he's forced to open door #3 and reveal a goat to keep the game going (assuming, as we have, that you picked door #1), which makes Pr(G|T) = 1. (If the car is behind door #1, Monty can open either door; we assume he chooses at random, just as Bob does, so Pr(G|O) = 1/2 in both scenarios.) That's not the case, however, when Bob comes to the stage in the alternate scenario. He's not forced to choose door #3 because he doesn't know where the car is; he might choose door #2 and end the game by winning the car. That reduction in Pr(G|T), from certainty to even odds, is the linchpin that transforms the entire problem. It's also incredibly cool that mathematics can "discern" a seismic shift in the probabilistic outcome based solely on whether the person opening the remaining doors knows what's behind them.
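The comparison can be checked with exact fractions. The sketch below (function and variable names are illustrative, not from any library) applies the law of total probability and Bayes' theorem to both sets of likelihoods. It assumes Monty, like Bob, picks between doors #2 and #3 at random when the car is behind door #1, so Pr(G|T) is the only likelihood that differs:

```python
from fractions import Fraction

THIRD = Fraction(1, 3)
# Priors: car behind door #1 (O), #2 (T), #3 (R).
PRIORS = {"O": THIRD, "T": THIRD, "R": THIRD}

def posteriors(likelihoods: dict[str, Fraction]) -> dict[str, Fraction]:
    """Bayes' theorem: Pr(X|G) = Pr(X) * Pr(G|X) / Pr(G) for X in O, T, R."""
    # Law of total probability for the evidence G.
    p_g = sum(PRIORS[x] * likelihoods[x] for x in PRIORS)
    return {x: PRIORS[x] * likelihoods[x] / p_g for x in PRIORS}

# G = "door #3 is opened and shows a goat", given that door #1 was picked.
bob = {"O": Fraction(1, 2), "T": Fraction(1, 2), "R": Fraction(0)}
# Assumption: Monty chooses uniformly between doors #2 and #3 when the car is behind #1.
monty = {"O": Fraction(1, 2), "T": Fraction(1), "R": Fraction(0)}

for name, lik in (("Bob", bob), ("Monty", monty)):
    post = posteriors(lik)
    print(name, "stick:", post["O"], "switch:", post["T"])
```

Bob's likelihoods give 1/2 for both sticking and switching, while Monty's give 1/3 and 2/3, the familiar answer to the original problem, even though only one entry in the likelihood table changed.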

The frustrating thing about Bayesian probability, however, is that the same answer can emerge from different interpretations of the conditional probabilities. For example, if we understood Pr(G|O) and Pr(G|T) to mean strictly "the probability of a goat behind door #3 (whether it's opened or not!) given the car is behind door #1 and door #2, respectively," then Pr(G|O) = Pr(G|T) = 1 and we get the same answer: Pr(G) = 2/3 and Pr(O|G) = (1/3)(1)/(2/3) = 1/2. Unfortunately, that approach mirrors the faulty (i.e., ex post facto) logic that leads to the erroneous answer in the first scenario: If we calculate our chances after Monty shows us the goat behind door #3, which is what I think most people do when they're asked to consider the problem in its original form, then we can confidently claim Pr(G|O) = Pr(G|T) = 1, leading to a posterior probability of 0.5 and no reason to switch doors. Of course, the benefit of this sort of mathematical flexibility is that we can design and investigate a number of different scenarios using alternate likelihoods (the conditional probabilities Pr(G|O), Pr(G|T), and Pr(G|R)) that lead ineluctably to the same (possibly counterintuitive) posterior probability.
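Written out, the "opened or not" reading assigns identical likelihoods no matter who opens the door, which is why it can't distinguish Monty's game from Bob's:

$$
\Pr(G) = \tfrac{1}{3}(1) + \tfrac{1}{3}(1) + \tfrac{1}{3}(0) = \tfrac{2}{3},
\qquad
\Pr(O \mid G) = \Pr(T \mid G) = \frac{(1/3)(1)}{2/3} = \tfrac{1}{2}.
$$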
